5.16.2012

Tunnel Vision

I spent a few hours this past week earnestly porting the strategies and making them work, adding new features to the common framework in support of the strategies, etc. It was only once the first one was running that I began to wonder -- what in the world is this strategy attempting to do?

While I like to think I do an honest job of commenting my code, it could always be improved. However, the worst part of porting this code was that I had never written down, in simple, concise English, what the purpose of the strategy was! I noticed and rectified this omission in my earliest work at some point, but this strategy predated that.

Desperately I searched through my revision history to find an older version that was perhaps simpler and more likely to be understood. Looking at the old version (before porting) did answer my question, but not in a good way. I now understood what the strategy was doing, but I hadn't a clue as to why. It simply made no sense at all.

This sent my head spinning -- I opened up some of the current strategies and, although they are successful, they made no sense to me either. I've simply had tunnel vision for too long.

Here I have been porting these things to run in the new and improved layer (which makes coding a strategy much simpler), but now I have no idea what the point of the strategies I'm running or porting is. I still have a sense of the general concepts I wish to employ -- some strategies should perform better in trending markets, others in ranging markets, etc. However, the execution and implementation of those strategies seem to have gotten lost while I focused on coding the framework and making things robust. Time to sharpen my pencils and step back for a minute.

I realize I haven't looked at a chart, I mean really looked at a chart in a long time. Far too long.

2.08.2012

Price Feed Success

1.28.2012

Price Feed Failures

Due to the programming changes I made in separating the individual bots from the price feeds, the bots themselves are completely unaffected by the price feed failure, at least from a technical standpoint. From a practical standpoint, they will not be getting any updated pricing information, and will therefore not be able to properly manage trades. I think the next step here is to implement more automatic failovers to other datafeeds where necessary. I have found a couple of potential feeds that can work as a failover in a pinch -- the question is, would you rather have data that is potentially 10 pips or more off your broker's feed, or no data at all?

In most cases (depending on the strategy) I think having a general idea of where the price is, even if it's a bit off, is better than sitting blind. If we execute trades on the prices generated from the failover feed, we'll chalk up the execution price against the opening price as slippage anyway.

Automatically failing over, and then rotating back to the real feed at some point will be a technical brain teaser to solve. The most interesting piece will be making the transitions transparent to the strategies using the feed. However, I also need to bulk up my alerting system so I am aware of what's going on as needed. This will likely consist of getting email alerts sent to my phone again. As it stands, if I can get the email, I can login and respond to whatever issue may be occurring. Last but not least, I need to figure out why the feed is failing and if possible fix it.
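Here is a minimal Python sketch of the failover idea. It assumes each feed can be wrapped as a function that returns a price or raises an exception on failure. The names `FailoverFeed`, `retry_every` and `slippage_pips` are hypothetical and do not come from any existing framework or broker API. Strategies only ever call `quote()`, so they never learn which feed answered; that is the transparency described above. The `alert` callback is where an email or SMS hook would plug in.

```python
from typing import Callable


class FailoverFeed:
    """Hypothetical wrapper that hides feed failover from the strategies.

    Quotes come from the primary feed until it raises; then the backup feed
    is used, and the primary is retried every `retry_every` quotes.
    """

    def __init__(self, primary: Callable[[str], float],
                 backup: Callable[[str], float],
                 retry_every: int = 10,
                 alert: Callable[[str], None] = print):
        self.primary = primary
        self.backup = backup
        self.retry_every = retry_every
        self.alert = alert          # hook for email/SMS alerting
        self.on_backup = False
        self._backup_quotes = 0     # quotes served since failing over

    def quote(self, pair: str) -> float:
        if not self.on_backup:
            try:
                return self.primary(pair)
            except Exception as exc:
                self.on_backup = True
                self._backup_quotes = 0
                self.alert(f"primary feed failed ({exc}); using backup")
                return self.backup(pair)

        # Already on the backup: periodically probe the primary again.
        self._backup_quotes += 1
        if self._backup_quotes % self.retry_every == 0:
            try:
                price = self.primary(pair)
            except Exception:
                pass  # still down; keep serving backup quotes
            else:
                self.on_backup = False
                self.alert("primary feed restored")
                return price
        return self.backup(pair)


def slippage_pips(signal_price: float, fill_price: float,
                  pip: float = 0.0001) -> float:
    """Fill price minus the price the strategy acted on, in pips."""
    return (fill_price - signal_price) / pip
```

The primary is retried only every `retry_every` quotes. This keeps a flapping feed from bouncing the strategies back and forth on every tick. A time-based retry would work just as well.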

I started what is now a half-finished debugging session almost a week ago, and there has been no movement on my end to finish it. The potential for better trading should hopefully be motivation enough to take a serious look at overhauling the alerting, failover and reporting systems I'm currently using. The importance of this infrastructure can't be overstated. Now to find the time to implement it.

11.17.2011

Trading engine update

Success! As of this evening I have a live version of the new strategy engine running a breakout strategy in demo. Porting the old strategies over and refining the engine will likely continue for several weeks. I'm quite happy with how things turned out, but there is always more to do. I'm sure this will not be the last update to the strategy engine.

For the technical minded, let's just say hashes are really neat data structures, and without them this consolidation would not have been possible. ;-)

11.10.2011

Updating the trading framework

It's been some time since I spoke about the engine behind the robots and strategies I trade. I had a wonderful coding marathon last weekend, which resulted in many improvements to the trading framework I am using. Although I solved several issues and cleaned up many things, there remains one piece I haven't quite been able to clean up.

I have the dilemma of writing code that is clean and maintainable, while having client side strategies that are really one-off programs. The server side backend pieces are abstracted away and objectified nicely, but that doesn't help with the client side implementations. Simply stated, I have a template I wish to use for all new strategies. This is easy to do. The trouble comes when I update the template (fixing a bug, adding new cool stuff, etc.): the strategies don't get the update unless I manually merge it across. This does not scale as the number of strategies grows. The trouble with creating a generic class and having each strategy extend it is that each strategy has unique pieces, and I don't believe I can abstract any more pieces away, despite the fact that each one follows a common workflow. Perhaps creating a generic client class is the answer anyway (unless a programmer out there can shed some light). Regardless, this is a wall that is slowing progress on new trading ideas.
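One common way out of the copy-the-template problem is the template method pattern. The shared workflow lives once in a base class, and each strategy overrides only the hook that is genuinely unique to it. Below is a minimal Python sketch. `StrategyClient`, `on_tick` and the `Breakout` example are hypothetical names, not the author's code.

```python
from abc import ABC, abstractmethod


class StrategyClient(ABC):
    """Hypothetical base class: the shared workflow lives here, once."""

    def __init__(self, name):
        self.name = name
        self.log = []

    def run(self, prices):
        """Common workflow every strategy follows."""
        self.setup()
        for price in prices:
            signal = self.on_tick(price)   # the strategy-specific part
            if signal is not None:
                self.execute(signal, price)
        self.teardown()

    def setup(self):
        self.log.append(f"{self.name}: start")

    def teardown(self):
        self.log.append(f"{self.name}: stop")

    def execute(self, signal, price):
        # In a real client this would go through the execution layer.
        self.log.append(f"{self.name}: {signal} @ {price}")

    @abstractmethod
    def on_tick(self, price):
        """Return a signal such as "BUY"/"SELL", or None to do nothing."""


class Breakout(StrategyClient):
    """Example one-off strategy: buy once when price breaks above a level."""

    def __init__(self, name, level):
        super().__init__(name)
        self.level = level
        self.triggered = False

    def on_tick(self, price):
        if not self.triggered and price > self.level:
            self.triggered = True
            return "BUY"
        return None
```

With this structure, a fix to `run()` or `execute()` in the base class reaches every strategy without any manual merging. Only the unique logic in `on_tick()` stays per strategy.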

3.29.2011

Living in the cloud

Up until this point I have been self-hosted. I kept and maintained my own Subversion server, in addition to the development workstation and the application server for the bots to run on, and I had to keep those boxes plugged in and connected to the internet at all times. I remember coding extensive disconnect logic for the (almost) daily disconnects that occurred at the one location where I had the server running. Moving really threw a wrench into those plans. I had trouble keeping the machines plugged in and powered on, let alone connected to a stable, high-speed connection.

When the time came for an extended trip, it was an easy decision to also give the bots a break. I did this mostly for myself, to allow my brain to focus on other tasks, but also to avoid having to manage the server while physically located thousands of miles away. The bots really have always belonged on a managed server, and as of this past weekend, that's been corrected. I went with Amazon EC2 -- it's completely scalable (and priced accordingly). The uptime, bandwidth and price cannot be beat. In fact, combined with wireless data on my Android phone, I can admin the bots from anywhere. Having them running somewhere else, happily churning along, is actually quite freeing. I check in once a day and look at the P/Ls. So far, so good. I'm in love with the cloud. Now I just need to move the Subversion server as well, and everything will be migrated off my box.

4.14.2010

Bugs be gone!

Anyways, this obviously causes issues when attempting to trade multiple timeframes or strategies, or to hedge, in a single account. I've gotten around that by using sub accounts and seamlessly tying them together. Now then, calculating an average position and price is easy when all the positions are in the same direction. It's not quite so easy when you want an average position for a portfolio of positions! My objective here is to avoid calculating the PL of the portfolio on every iteration. I posted about my work earlier. Getting the average position consists of adding up and dividing out the long and short positions to arrive at a net price, units and direction.

The trouble comes in when you then try to use this "net" position to calculate your PL to exchange rate ratio. In other words, how much profit do I see with each pip? From there it would seem easy enough to predict your profit based on price movements. This is where the trouble seems to be occurring: given enough positions, this profit calculation no longer seems to work. If the math is right, blame the client! I guess my bug hunting will be focused on the client side now.
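The net-position math can be checked with a small worked example. This Python sketch makes some simplifying assumptions. It uses hypothetical `Position` records with signed units, measures PL in the quote currency, and ignores commissions and financing.

With those assumptions, the portfolio PL at rate r is the sum of u_i * (r - p_i), which equals U * r minus the sum of u_i * p_i, where U is the sum of the signed units u_i. So the PL is always linear in the rate with slope U, and the PL per pip is simply U times the pip size. When long and short units cancel (U = 0), the net average price is undefined but the PL is constant. Client code can easily mishandle that case. Converting the PL into an account currency that differs from the quote currency adds another exchange rate, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Position:
    units: int     # signed: positive = long, negative = short
    price: float   # entry price


def net_position(positions: List[Position]) -> Tuple[int, Optional[float], float]:
    """Return (net units, net average price, sum of units * price)."""
    net_units = sum(p.units for p in positions)
    cost = sum(p.units * p.price for p in positions)
    # With long and short units cancelling out there is no meaningful average price.
    avg_price = cost / net_units if net_units != 0 else None
    return net_units, avg_price, cost


def pl_direct(positions: List[Position], rate: float) -> float:
    """Reference value: PL summed position by position (quote currency)."""
    return sum(p.units * (rate - p.price) for p in positions)


def pl_from_net(positions: List[Position], rate: float) -> float:
    """Same PL from the net figures: net_units * rate - cost."""
    net_units, _, cost = net_position(positions)
    return net_units * rate - cost


def pl_per_pip(positions: List[Position], pip: float = 0.0001) -> float:
    """PL change for a one-pip move: net units times the pip size."""
    net_units, _, _ = net_position(positions)
    return net_units * pip
```

For example, long 10,000 at 1.3000, short 4,000 at 1.3100 and long 2,000 at 1.2950 nets to 8,000 units long at an average of 1.29375. At 1.3050, both functions give a PL of 90, and each pip is worth 0.8 in the quote currency. If `pl_direct` and `pl_from_net` ever disagree in the client, the bug is in the bookkeeping, not the math.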

3.31.2010

Framework Optimizations Complete

I would now be focusing on testing and vetting the strategy for use, except for the fact that my main machine is down for the count. This wouldn't be a huge issue if my SVN server didn't also happen to be running on it during this interim period. Right, I know what you're thinking: Git is SO much cooler than SVN. I agree. However, there is little reason to migrate at this point, given the other pressing issues. After all, I can't easily access the code right now, let alone migrate it.

3.26.2010

Of Execution

Pricefeed

This process grabs and stores realtime forex price data from Oanda. Currently, I have neither the disk space nor much desire for long-term storage, so for now I'm writing to CSV files on a weekly basis. Given that the data is available from Oanda with a 2-month rolling delay, there's little need to collect it for the major pairs, outside of verifying strategy execution.
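Here is a minimal Python sketch of weekly CSV rollover, assuming one file per pair per ISO week. The file naming scheme and the `store_tick` helper are hypothetical. Note that ISO weeks start on Monday, while the forex week opens on Sunday evening. As a result, Sunday ticks land in the previous week's file. Shift the timestamp before calling `isocalendar()` if that matters.

```python
import csv
import datetime as dt
from pathlib import Path


def weekly_path(base_dir: str, pair: str, ts: dt.datetime) -> Path:
    """One file per pair per ISO week, e.g. EURUSD_2010-W12.csv."""
    year, week, _ = ts.isocalendar()
    safe_pair = pair.replace("/", "")
    return Path(base_dir) / f"{safe_pair}_{year}-W{week:02d}.csv"


def store_tick(base_dir: str, pair: str, ts: dt.datetime,
               bid: float, ask: float) -> Path:
    """Append one tick, writing a header row when a new week's file starts."""
    path = weekly_path(base_dir, pair, ts)
    path.parent.mkdir(parents=True, exist_ok=True)
    new_file = not path.exists()
    with path.open("a", newline="") as fh:
        writer = csv.writer(fh)
        if new_file:
            writer.writerow(["timestamp", "bid", "ask"])
        writer.writerow([ts.isoformat(), bid, ask])
    return path
```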

Monitor

I monitor all strategies to ensure they are running, and have alerts based upon their actions. I will be alerted if a strategy dies, if the PL changes drastically, etc. These alerts are sent via email and SMS to my cell phone.
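The two alert conditions above can be sketched as a periodic check. The sketch assumes each strategy process reports a heartbeat timestamp and its current PL somewhere the monitor can read them. The `StrategyStatus` record and the 60-second and 500-unit thresholds are hypothetical. Email or SMS delivery is left out; the returned messages would be handed to whatever sender is in place.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StrategyStatus:
    name: str
    last_heartbeat: float   # epoch seconds of the last "still alive" report
    pl: float               # current PL as reported by the strategy


def check_strategies(statuses: List[StrategyStatus],
                     previous_pl: Dict[str, float],
                     now: float,
                     max_silence: float = 60.0,
                     max_pl_move: float = 500.0) -> List[str]:
    """Return alert messages; `previous_pl` is updated in place for the next check."""
    alerts = []
    for s in statuses:
        silence = now - s.last_heartbeat
        if silence > max_silence:
            alerts.append(f"{s.name}: no heartbeat for {silence:.0f}s")
        prev = previous_pl.get(s.name)
        if prev is not None and abs(s.pl - prev) > max_pl_move:
            alerts.append(f"{s.name}: PL moved {s.pl - prev:+.2f}")
        previous_pl[s.name] = s.pl
    return alerts
```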

Strategy

Finally, each strategy itself will be running inside its own process.

Analysis

I run this from time to time to analyze any strategy log, whether from live mode or backtesting mode. Essentially, it produces trade statistics and graphs things like balance, PL, trade timespan, etc.
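The kind of statistics the analysis step produces can be sketched from a list of closed-trade PLs. In this Python sketch, the `trade_stats` helper and its output fields are hypothetical. The balance curve it returns is what would be passed to a plotting library for the graphs.

```python
from typing import Dict, List


def trade_stats(pls: List[float], starting_balance: float = 0.0) -> Dict[str, object]:
    """Summary statistics for a sequence of closed-trade PLs."""
    balance = starting_balance
    peak = balance
    max_drawdown = 0.0
    curve = []
    for pl in pls:
        balance += pl
        curve.append(balance)
        peak = max(peak, balance)                       # highest balance so far
        max_drawdown = max(max_drawdown, peak - balance)
    n = len(pls)
    wins = sum(1 for pl in pls if pl > 0)
    return {
        "trades": n,
        "win_rate": wins / n if n else 0.0,
        "total_pl": sum(pls),
        "avg_pl": sum(pls) / n if n else 0.0,
        "max_drawdown": max_drawdown,
        "balance_curve": curve,
    }
```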

Framework Optimizations

My typical strategy shell, as it were, has certainly evolved over the course of coding. It never seems to stay up to date as I continue to add to the framework and realign my thinking and strategy code. The latest set of optimizations is basic in concept, but slightly harder to implement than I first realized. Up until this point, laziness combined with several other factors has led to abuse of the calcPL() command[1]. Because of design overlap and poor coding, it was sometimes being calculated 2 or even 3 times per iteration. Obviously this burns CPU cycles, but more importantly, it takes valuable time away from strategy analysis and price feed updates. And of course, it's also why backtesting has slowed from "instantaneous" results to taking 10 minutes.

Despite already knowing the issue, I decided to take the opportunity to profile my code for the first time. Profiling[2] quickly confirmed the problem: several hundred million calcPL() calls made for only a few hundred trades.

Rectifying this CPU waste has been a challenge. The ultimate goal is to completely remove any redundant calls to calcPL(), and instead allow the strategy to "know" when it needs to execute something. Obviously this means more upfront calculation and tracking, as well as storing the data. To the extent it makes sense, the objects have gained additional attributes that will store much of the data. Some of it will remain inside the client. Of course, this is only a first pass, and will likely see its own enhancements as time goes on.

The end result should create very fast iterations while running the strategy. Essentially the new processing order for each iteration will be as follows:

1. Get the exchange rate
2. Simple check to see if we need to act

Currently there is both a calcPL() layer and an analysis layer sandwiched between those steps. If your strategy can be rewritten to fit the simple check model above, even your smartphone could run it :-)
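Here is a sketch of the "simple check" model. It assumes a single long trade with a fixed stop and target measured in pips; `OpenTrade`, `open_long` and the pip values are hypothetical. The upfront work converts the PL rules into two price levels when the trade opens. After that, each iteration is just a rate fetch and two comparisons, with no PL calculation at all.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class OpenTrade:
    units: int
    entry: float
    stop: float     # exit price if the trade goes against us
    target: float   # exit price if the trade goes our way


def open_long(units: int, entry: float, stop_pips: float, target_pips: float,
              pip: float = 0.0001) -> OpenTrade:
    # Upfront calculation, done once per trade: turn the PL rules into price levels.
    return OpenTrade(units, entry,
                     stop=entry - stop_pips * pip,
                     target=entry + target_pips * pip)


def run(rates: Iterable[float], trade: OpenTrade) -> Tuple[Optional[int], str]:
    """Per-iteration loop: no PL calculation, just comparisons."""
    for i, rate in enumerate(rates):      # 1. get the exchange rate
        if rate <= trade.stop:             # 2. simple check: do we need to act?
            return i, "stop"
        if rate >= trade.target:
            return i, "target"
    return None, "open"
```

A short trade would mirror this with the comparisons flipped. PL then only needs to be computed when a level is actually hit.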

1. I also wanted to have realtime data on my PL. Until now, instead of pursuing the proper solution, I've optimized the calcPL() call. To get this realtime PL data under the new model, there are several approaches one could take. Before coding much of the framework, I had a separate monitor process that also tracked PL. Separation is an easy and wise solution, as you can both monitor and perform additional analysis with it, and gain the stability of a separate process.

2. I used Devel::NYTProf and got a cool html graph output. CPAN makes things easy, so don't put off profiling for as long as I did.

2.25.2010

Of Backtesting

That's not to say backtesting has no place. As I said, it helps to reveal bugs, and can confirm or disprove your thesis on the core of the strategy. For example, if I code a trend-following system, I would want to backtest it on a dataset containing trends. This will help me see whether my idea of how to identify a trend is correct (and correctly coded). The performance of the robot on this dataset is a secondary indicator, but again is most useful for verifying the code. If a robot wipes out the account or otherwise experiences severe drawdown my strategy doesn't call for, it usually means there is a bug somewhere. Typically, you can no more be always wrong than always right. Either one is cause for investigation. Or you found the grail. Heh. My money is on a bug!
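The "always wrong or always right" rule of thumb can be turned into an automatic red flag on backtest results. This is a minimal Python sketch. The 30-trade minimum and the 10%/90% win-rate cutoffs are arbitrary placeholders, not values from the original. They should be tuned to the strategy's expected hit rate.

```python
from typing import List


def backtest_red_flags(pls: List[float], min_trades: int = 30,
                       low: float = 0.10, high: float = 0.90) -> List[str]:
    """Flag backtests whose win rate is suspiciously close to 0% or 100%."""
    n = len(pls)
    if n < min_trades:
        return [f"only {n} trades; too few to judge"]
    win_rate = sum(1 for p in pls if p > 0) / n
    if win_rate <= low:
        return [f"win rate {win_rate:.0%}: almost always wrong, look for a bug"]
    if win_rate >= high:
        return [f"win rate {win_rate:.0%}: almost always right, look for a bug (or the grail)"]
    return []
```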

Of Frameworks

While there are many packages out there to support such endeavors, I have written my own framework to accomplish this. In a nutshell, I have separated out the execution, data and logic layers of a trading program. The execution layer takes care of communication and the physical execution of trades at my broker. The data layer provides feeds of price and other information for use by any particular strategy I am running. Finally, the logic layer is separated out into individual strategies running within the framework.

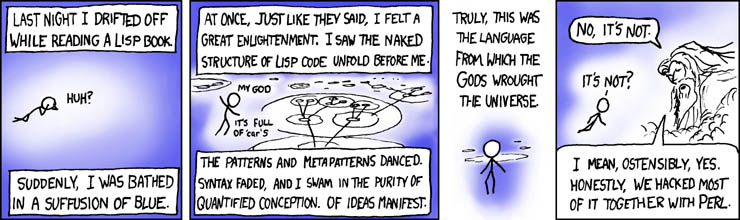

It sounds much more complicated than it really is. But having this proper framework allows me to code strategies easily, and then run them through backtesting or live mode. I suppose I would be remiss if I didn't also mention that I did this all in Perl.
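The layer separation can be sketched in a few lines of Python. The framework itself is Perl, and these class names are hypothetical. The logic layer only sees prices and an object with an `order()` method. Here the data layer replays history and the execution layer records paper fills; the same strategy would run unchanged against a live feed and broker exposing the same methods.

```python
from typing import Iterable, Iterator, List, Optional, Protocol, Tuple


class DataLayer(Protocol):
    def prices(self) -> Iterator[float]: ...


class ExecutionLayer(Protocol):
    def order(self, side: str, units: int, price: float) -> None: ...


class HistoricalFeed:
    """Backtest data layer: replays stored prices."""

    def __init__(self, history: Iterable[float]):
        self.history = list(history)

    def prices(self) -> Iterator[float]:
        return iter(self.history)


class PaperBroker:
    """Backtest execution layer: records fills instead of sending orders."""

    def __init__(self):
        self.fills: List[Tuple[str, int, float]] = []

    def order(self, side: str, units: int, price: float) -> None:
        self.fills.append((side, units, price))


class CrossAbove:
    """Logic layer: buy whenever price crosses above a level."""

    def __init__(self, level: float, units: int):
        self.level = level
        self.units = units
        self.prev: Optional[float] = None

    def on_price(self, price: float, broker: ExecutionLayer) -> None:
        if self.prev is not None and self.prev <= self.level < price:
            broker.order("buy", self.units, price)
        self.prev = price


def run(feed: DataLayer, broker: ExecutionLayer, strategy: CrossAbove) -> None:
    # Swapping in live feed/broker objects with the same methods switches
    # the strategy from backtest to live mode without touching its code.
    for price in feed.prices():
        strategy.on_price(price, broker)
```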